Optimized shot-based encodes:

Now Streaming!

By Megha Manohara, Anush Moorthy, Jan De Cock, Ioannis Katsavounidis and Anne Aaron

Bad picture quality — blockiness, blurring, distorted faces and objects — can draw you out of that favorite TV show or movie you’re watching on Netflix. In many cases, low bandwidth networks or data caps prevent us from delivering the perfect picture. To address this, the Netflix Video Algorithms team has been working on more efficient compression algorithms that enable Netflix to deliver the same or better picture quality while using less bandwidth. And working together with other engineering teams at Netflix, we update our client applications and streaming infrastructure to support the new video streams and to ensure seamless playback on Netflix devices.

To improve our members’ video quality, we developed and deployed per-title encoding in 2015, followed by better mobile encodes for downloads a year later. Our next step was productizing a shot-based encoding framework, called Dynamic Optimizer, resulting in more granular optimizations within a video stream. In this article we describe some of the implementation challenges we overcame in bringing this framework into our production pipeline, and practical results on how it improves video quality for our members.

Implementing Dynamic Optimizer in Production

As described in more detail in this blog post, the Dynamic Optimizer analyzes an entire video over multiple quality and resolution points in order to obtain the optimal compression trajectory for an encode, given an optimization objective. In particular, we use VMAF, the Netflix perceptual video quality metric, as our optimization objective, since our goal is to generate streams at the best perceptual quality.

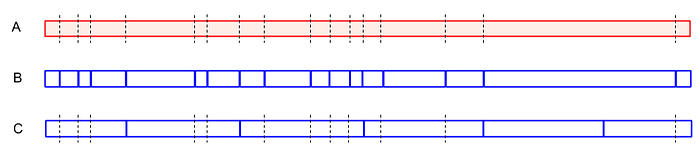

The primary challenge we faced in implementing the Dynamic Optimizer framework in production was retrofitting our parallel encoding pipeline to process significantly more encode units. First, the analysis step for the Dynamic Optimizer required encoding at different resolutions and qualities (QPs), which increased complexity by an order of magnitude. Second, we transitioned from encoding video chunks a few minutes long to encoding on a per-shot basis. For example, in the original system, a 1-hour episode of Stranger Things results in twenty 3-minute chunks. With shot-based encoding and an average shot length of 4 seconds, the same episode requires processing 900 shots, 45 times as many units. Assuming each chunk corresponds to a shot (Fig. 1B), and counting the additional analysis encodes, the new framework increased the number of chunks by more than two orders of magnitude per title. This increase exposed system bottlenecks related to the number of messages passed between compute instances. We developed several engineering innovations to address these limitations, and we discuss two of them here: collation and checkpoints.
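The arithmetic behind the Stranger Things example can be checked with a few lines of Python. This is only a back-of-the-envelope sketch: the function name `unit_counts` is hypothetical, and it counts the split into shots alone, not the extra resolution and QP encodes from the analysis step, which multiply the total further.

```python
def unit_counts(duration_s: int, chunk_s: int, avg_shot_s: int):
    """Return (chunks, shots, shots-per-chunk ratio) for one title."""
    chunks = duration_s // chunk_s    # fixed-length chunks in the original system
    shots = duration_s // avg_shot_s  # encode units when every shot is its own chunk
    return chunks, shots, shots / chunks


# 1-hour episode, 3-minute chunks, 4-second average shot length (figures from the text)
chunks, shots, ratio = unit_counts(3600, 180, 4)
print(chunks, shots, ratio)  # 20 900 45.0
```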

While we could have improved the core messaging system to handle such an increase in message volume, it was not the most feasible and expedient solution at that time. We instead adapted our pipeline by introducing collation.

In collation, we group shots together, so that a set of consecutive shots makes up a chunk. Because we have flexibility in how collation occurs, we can group an integer number of shots together to produce approximately the same 3-minute chunk duration that we produced initially under the chunk-based encode model (Fig. 1C). These chunks can be configured to be approximately the same size, which helps with resource allocation for instances previously tuned for encoding chunks a few minutes long. Within each chunk, the compute instance independently encodes each of the shots, with its own set of defined parameters.
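One way to picture collation is a greedy grouping of consecutive shots toward a target chunk duration. The sketch below is an illustration under that assumption, not the production algorithm; `collate_shots` and `target_chunk_s` are hypothetical names.

```python
from typing import List


def collate_shots(shot_durations: List[float],
                  target_chunk_s: float = 180.0) -> List[List[int]]:
    """Group consecutive shot indices into chunks of roughly target_chunk_s seconds."""
    chunks: List[List[int]] = []
    current: List[int] = []
    current_s = 0.0
    for index, duration in enumerate(shot_durations):
        # Close the chunk when adding this shot would move its duration
        # further from the target than stopping here.
        overshoot = abs(current_s + duration - target_chunk_s)
        undershoot = abs(current_s - target_chunk_s)
        if current and overshoot > undershoot:
            chunks.append(current)
            current, current_s = [], 0.0
        current.append(index)
        current_s += duration
    if current:
        chunks.append(current)
    return chunks


# 900 shots of 4 seconds each collapse back into twenty 3-minute chunks.
chunks = collate_shots([4.0] * 900)
print(len(chunks), len(chunks[0]))  # 20 45
```

Each chunk keeps whole shots only, so the shots inside it can still be encoded independently with their own parameters.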

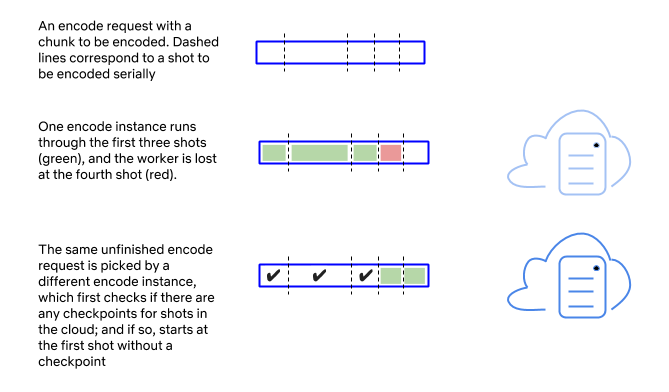

Collating independently encoded shots within a chunk led to an additional system improvement we call checkpoints. Previously, if we lost a compute instance (because we had borrowed it and it was suddenly needed for higher priority tasks), we re-encoded the entire chunk. In the case of shots, each shot is independently encoded. Once a shot is completed, it does not need to be re-encoded if the instance is lost while encoding the rest of the chunk. We created a system of checkpoints (Fig. 2) to ensure that each encoded shot and associated metadata are stored immediately after completion. Now, if the same chunk is retried on another compute instance, encoding does not start from scratch but from the shot where it left off, bringing computational savings.
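The checkpoint idea can be sketched as follows, assuming a shared directory stands in for durable storage and a caller-supplied `encode_shot` function stands in for the encoder. Both are hypothetical, as is the function name `encode_chunk`; the source does not describe the actual storage layer.

```python
import json
from pathlib import Path
from typing import Callable, Dict, List


def encode_chunk(shot_ids: List[str],
                 encode_shot: Callable[[str], dict],
                 checkpoint_dir: Path) -> Dict[str, dict]:
    """Encode every shot in a chunk, skipping shots that already have a checkpoint."""
    checkpoint_dir.mkdir(parents=True, exist_ok=True)
    results: Dict[str, dict] = {}
    for shot_id in shot_ids:
        marker = checkpoint_dir / f"{shot_id}.json"
        if marker.exists():
            # A previous attempt finished this shot: reuse its metadata.
            results[shot_id] = json.loads(marker.read_text())
            continue
        metadata = encode_shot(shot_id)
        # Store the checkpoint right after the shot completes. Writing to a
        # temporary file and renaming avoids leaving a half-written checkpoint.
        tmp = marker.with_suffix(".tmp")
        tmp.write_text(json.dumps(metadata))
        tmp.replace(marker)
        results[shot_id] = metadata
    return results
```

If the instance is lost partway through, a retry on another instance calls `encode_chunk` with the same arguments and only encodes the shots that have no checkpoint yet.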

Compression Performance

In December 2016, we introduced AVCHi-Mobile and VP9-Mobile encodes for downloads. For these mobile encodes, several changes led to improved compression performance over per-title encodes, including longer GOPs, flexible encoder settings and per-chunk optimization. These streams serve as our high quality baseline for H.264/AVC and VP9 encoding with traditional rate control settings.

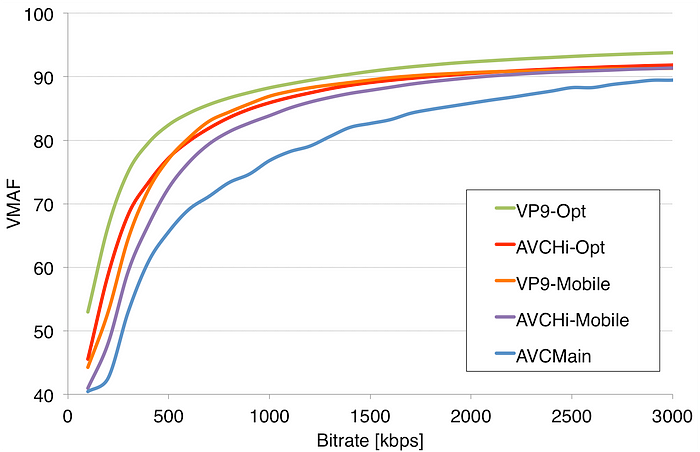

The graph below (Fig. 3) demonstrates how the combination of Dynamic Optimization with shot-based encoding further improves compression efficiency. We plot the bitrate-VMAF curves of our new optimized encodes, referred to as VP9-Opt and AVCHi-Opt, compared to

- Per-chunk encodes for downloads (VP9-Mobile and AVCHi-Mobile)

- Per-title encodes for streaming (AVCMain).

To construct this graph, we took a sample of thousands of titles from our catalog. For each bitrate, x, (on the horizontal axis), and for each title, we selected the highest quality encode (as expressed by a VMAF score) with bitrate ≤ x. We then averaged VMAF values across all the titles for the given x, which provided one point for each curve in the following figure. Sweeping over all bitrate values x, this resulted in 5 curves, corresponding to the 5 types of encodes discussed above. Assuming stable network conditions, this is the average VMAF quality you will receive on the Netflix service at that particular video bandwidth.
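The curve construction described above can be sketched in Python. The data layout (a list of `(bitrate_kbps, vmaf)` pairs per title) and the function names are assumptions for illustration. The sketch also assumes that a title with no encode at or below a given bitrate is left out of the average for that bitrate; the text does not say how such cases were handled.

```python
from typing import Dict, List, Optional, Tuple

Encode = Tuple[float, float]  # (bitrate_kbps, vmaf)


def best_vmaf_at(encodes: List[Encode], x: float) -> Optional[float]:
    """Highest VMAF among a title's encodes with bitrate <= x, or None if there is none."""
    eligible = [vmaf for bitrate, vmaf in encodes if bitrate <= x]
    return max(eligible) if eligible else None


def average_curve(titles: Dict[str, List[Encode]],
                  bitrates: List[float]) -> List[Tuple[float, float]]:
    """One (bitrate, mean VMAF) point per swept bitrate x, averaged over titles."""
    curve = []
    for x in bitrates:
        candidates = (best_vmaf_at(encodes, x) for encodes in titles.values())
        scores = [s for s in candidates if s is not None]
        if scores:  # assumption: skip titles with no eligible encode
            curve.append((x, sum(scores) / len(scores)))
    return curve
```

Running `average_curve` once per encode family (for example, once with the AVCMain encodes of every title, once with the VP9-Opt encodes) would yield one curve per family.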

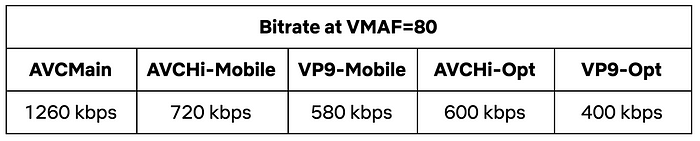

Let’s illustrate the reduction in bitrate at equivalent quality, by drawing a horizontal line at VMAF=80 (good quality), which gives us the following bitrates:

We can see that, compared to per-title encoding with AVCMain, the optimized encodes require less than half of the bits to achieve the same quality. With VP9-Opt, we can stream the same quality at less than one third of the bits of AVCMain. Compared to AVCHi-Mobile and VP9-Mobile, we save 17% and 30%, respectively.
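Reading a bitrate off a curve at a fixed VMAF, and turning two such bitrates into a percentage saving, can be sketched as below. The linear interpolation between neighboring points is an assumption made for illustration, and the function names are hypothetical; no values from the actual curves are used here.

```python
from typing import List, Optional, Tuple


def bitrate_at_vmaf(curve: List[Tuple[float, float]],
                    target: float) -> Optional[float]:
    """Interpolate the bitrate at which a (bitrate, vmaf) curve reaches the target VMAF."""
    points = sorted(curve)
    for (b0, v0), (b1, v1) in zip(points, points[1:]):
        if v1 > v0 and v0 <= target <= v1:
            return b0 + (target - v0) * (b1 - b0) / (v1 - v0)
    return None  # the curve never crosses the target


def savings_pct(baseline_kbps: float, optimized_kbps: float) -> float:
    """Bitrate reduction of the optimized encode relative to the baseline, in percent."""
    return 100.0 * (baseline_kbps - optimized_kbps) / baseline_kbps
```

With this definition, "less than half of the bits" corresponds to a saving above 50%, and "less than one third" to a saving above roughly 66.7%.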

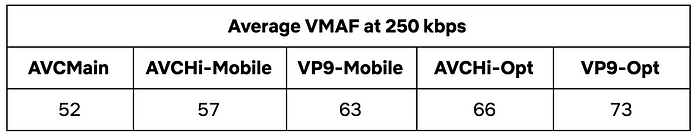

We also examine how visual quality is impacted given the same bandwidth. For example, at an average cellular connection bandwidth of 250 kbps, the encodes reach the average VMAF values shown in the table below. The optimized encodes provide noticeably better video quality than AVCMain.

To illustrate the difference in visual quality, the example below shows a frame from a Chef’s Table episode, taken from different encodes at approximately 250 kbps. Immediately noticeable is the increased quality in textures (bricks, trees, rocks, water, etc.). There is a clearly visible difference between AVCMain (Fig. 4A, VMAF=58) and AVCHi-Opt (Fig. 4B, VMAF=73). The VP9-Opt frame (Fig. 4C, VMAF=79) looks sharpest.

In the following example, we show a detail of the opening scene of 13 Reasons Why, at approximately 250 kbps. For AVCMain (Fig. 5A), the text at the top is hardly legible, deserving a VMAF value of 60. For AVCHi-Opt (Fig. 5B), we see a large jump in quality to a VMAF value of 74. For VP9-Opt (Fig. 5C), the text and edges become crisp, and we get another noticeable increase in quality, which is also reflected in the VMAF value of 81.

Testing Optimized Encodes in the Field

In the previous section, we illustrated that optimized encodes offer significantly higher compression efficiency than per-title encodes, leading to higher quality at a comparable bitrate, or lower bitrate at the same quality. The question remains whether this translates into an improved experience for our members.

Before deploying any new encoding algorithm in production, we thoroughly validate playability of the streams using A/B testing on different platforms and devices. A/B testing provides us with a controlled way to compare the Quality of Experience (QoE) of a treatment cell (our new encodes), to the control cell (existing experience). We ran A/B tests on a wide range of devices and titles to compare our optimized encodes against the existing AVCMain streaming experience. This also allowed us to fine-tune our encoding algorithms and adaptive streaming engine for different platforms.

We assessed the impact of optimized encodes on different QoE metrics. Based on the results of A/B testing, we expect the following improvements to our members’ viewing experience:

- For members with low-bandwidth connections, we will deliver higher quality video at the same (or even lower) bitrate.

- For members with high-bandwidth connections, we will offer the same great quality at a lower bitrate.

- Many members will experience fewer rebuffers and quality drops when there is a drastic reduction in their network throughput.

- Devices that support VP9 streams will benefit from even higher video quality at the same bitrate.

In addition, many of our members have a data cap on their cellular plans. With the new optimized encodes, these members can now stream more hours of Netflix at the same or better quality using the same amount of data. The optimized encodes are also available for our offline downloads feature. For downloadable titles, our members can watch noticeably higher quality video for the same storage.

Re-encoding and Device Support

Over the last few months, we have generated AVCHi-Opt encodes for our entire Netflix catalog and started streaming them on many platforms. You can currently enjoy these optimized streams when watching Netflix on iOS, Android, PS4 and Xbox One. VP9-Opt streams have been made available for a selection of popular content, and can be streamed on certain Android devices. We are actively testing these new streams on other devices and browsers.

Whether you’re watching Chef’s Table on your smart TV with the fastest broadband connection, or Jessica Jones on your mobile device with a choppy cellular network, Netflix is committed to delivering the best picture quality possible. The launch of the new optimized encodes is an excellent example of combining innovative research, effective cross-team engineering and data-driven deployment to bring a better experience to our members.